Agent Experience (AX) Design: A Framework for Human–Agent Systems in OutSystems

- Kristi Kitz

- 14 hours ago


Designing for AI Agents: Focusing on the How

Recently, my colleague wrote about how AI is changing user interaction in complex systems.

You can read that article here: https://www.acceleratedfocus.com/post/5-considerations-on-how-ai-rewrites-the-rules-of-user-interaction-in-complex-systems

This article picks up where that one left off to focus on the how. Specifically, how we think about designing for AI agents inside real business processes. Not superficial chatbots, but actual workflows where people, systems, and decisions are complex and already tightly integrated.

We call it AI Agent Experience (AX) Design. Organizations engage us to help them design where and how agents fit into core business workflows, leveraging OutSystems before committing to development. The outcome is a tangible and shared understanding of roles and responsibilities across humans, agents, and systems.

This article outlines the framework we use in that work.

What We Mean by Agent Experience (AX)

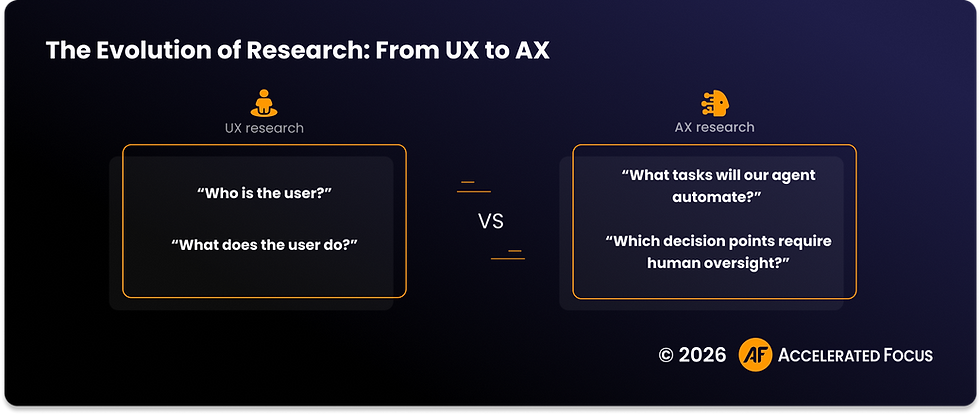

This term describes something different from traditional user experience design. It is an emerging concept with varying definitions, but here is mine:

User experience focuses on how people interact with a system.

Agent experience focuses on how an AI participates in the system.

That participation raises questions that standard UX patterns do not fully cover. On the surface, AI experiences often look simple, but what users see is only a small part of what has actually been designed.

Much of this work is invisible. Decisions have to be made about the agents' roles, their boundaries, where they can act on their own, and where humans must be involved.

In this model, design is not mainly about interfaces. It is about shaping the workflow, defining roles, and deciding how people and systems work together. I believe this was always true, but it matters now far more than it did before.

How We Approach Designing Agent Experiences

There are many ways to break down this work, but this is our model, as represented by four stages. The main goal is to focus on the business needs and be deliberate about where and how AI is introduced, rather than jumping straight to solutions.

Identify the right entry point

Understand the current state

Determine agent fit

Design the human-agent process

1. Where do we start?

Organizations don’t all enter this work from the same place.

Sometimes there’s a clear problem that we think AI can solve. Sometimes there’s an existing process or application that feels like a natural place to explore AI. Sometimes the starting point is broader, like a business objective, a leadership directive, or (as one often hears) pressure to “do something with AI” without clarity on what that actually means.

At this stage, it is less about AI capabilities and more about intent. We may ask questions such as the following:

Goals: What business outcome are we trying to change? (e.g., cost, speed, quality, risk, customer experience, employee workload)

Use case: Given the answer to the first question, where in the organization, process, or application is this most felt today?

Success criteria: What does “better” actually mean for this process? (e.g., faster decisions, fewer errors)

Metrics: How will success be measured?

Feasibility: An important one not to forget: what constraints might exist? (e.g., regulatory, policy, risk tolerance, data availability)

2. Understand the work as it is today (current state)

Before introducing agents, we must understand the core business process.

This step looks a lot like traditional UX research, so it should feel familiar. Depending on the context, this work may include: stakeholder and 1-1 user interviews; reviewing existing process artifacts; mapping workflows; and application walkthroughs with end users.

This body of work is well known, and there are already many resources online, so it doesn’t require further elaboration here.

What changes?

This step is non-negotiable groundwork (I would argue in any product design initiative). For agentic applications, the methods are similar, but the tolerance for ambiguity is lower.

In systems where agents participate in the flow of work, assumptions about roles, inputs, and decisions are no longer absorbed by people; they are built into how the system behaves. Gaps that were previously handled informally can become fixed points in the workflow.

The purpose of this step is to surface and resolve those assumptions. Both the focus and the mental model shift.

3. Determining agent fit

We don’t design for “the agent” as a single entity. In practice, agents take on specific roles, each responsible for a narrow part of the workflow. Multiple agents may work together across a single process, but each one should have a clear job.

Define agents by role, not capability

Many frameworks classify agents based on how they reason or learn (reactive, goal-based, learning agents, and so on). Those distinctions can be useful technically, but they are less helpful for design.

For AX work, it is more practical to define agents by where they sit in the workflow and what responsibility they carry. The question is: which part of the work does it support?

How agent roles emerge

Agent roles are not chosen upfront. They emerge from the structure of the work.

The opportunities become visible in the current-state analysis. For example:

where is time spent preparing or organizing information?

which repeated tasks exist mainly to move data?

which decisions require assembling context from multiple systems?

where do manual checks compensate for incomplete or inconsistent inputs?

If a step requires effort but little judgment, it may be a candidate for agent support. If it requires judgment, the role of the agent is to prepare, contextualize, or clarify, but not to decide.
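To make that rule of thumb concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the 1 to 5 scoring scale, the threshold, the `Step` and `agent_fit` names, and the "leave as is" fallback are not part of the framework itself.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    effort: int    # hypothetical scale: 1 (low) to 5 (high)
    judgment: int  # hypothetical scale: 1 (low) to 5 (high)


def agent_fit(step: Step, threshold: int = 3) -> str:
    """Return a rough suggestion for how an agent might support a step."""
    if step.judgment >= threshold:
        # Judgment stays with a person; the agent prepares, contextualizes, or clarifies.
        return "agent prepares, human decides"
    if step.effort >= threshold:
        # Effort with little judgment: a candidate for agent support.
        return "candidate for agent support"
    # Assumption: low-effort, low-judgment steps are not worth changing yet.
    return "leave as is"


# Illustrative steps with made-up scores
print(agent_fit(Step("re-key claim data", effort=4, judgment=1)))
print(agent_fit(Step("approve payout", effort=2, judgment=5)))
```

In practice the scores would come out of the current-state research, not from a formula; the sketch only shows that judgment is checked before effort.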

Once agent roles are introduced, the human role in the workflow inevitably changes.

Example roles (illustrative)

In an insurance claims process, common agent roles might include:

Intake agent: structures incoming claim information

Context agent: gathers relevant history and policy details

Preparation agent: summarizes and flags gaps before review

Routing agent: sends work to the appropriate next step

Recommendation agent: suggests options without taking action

Labels may vary, but I feel what matters most is clarity of responsibility.

Orchestration and scope

Once roles are defined, the focus shifts to how they work together. Some agents run in sequence, some in parallel, and some hand work off based on what they find. This coordination is orchestration. There are many patterns for this.

Here is an excellent resource from Microsoft, enumerating some common orchestration patterns:

Here are a couple of examples from that article.

Sequential orchestration example

Agents work in a defined order, with each step building on the output of the previous one.
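As a rough sketch of the sequential pattern, the snippet below chains three of the illustrative claims roles in plain Python. The function names, the dictionary-shaped claim, and the `REQUIRED_FIELDS` tuple are hypothetical; this is not an OutSystems or Microsoft API.

```python
from typing import Callable, Dict, List

Claim = Dict[str, object]          # hypothetical claim shape
Agent = Callable[[Claim], Claim]   # each agent takes a claim and returns an enriched copy

REQUIRED_FIELDS = ("incident_date", "amount")  # assumed fields, for illustration only


def intake_agent(claim: Claim) -> Claim:
    # Structures incoming claim information (stubbed here as a flag).
    return {**claim, "structured": True}


def context_agent(claim: Claim) -> Claim:
    # Gathers relevant history and policy details (placeholder value).
    return {**claim, "policy_details": "placeholder"}


def preparation_agent(claim: Claim) -> Claim:
    # Flags gaps before human review.
    gaps = [f for f in REQUIRED_FIELDS if f not in claim]
    return {**claim, "gaps": gaps}


def run_sequence(claim: Claim, agents: List[Agent]) -> Claim:
    # Each agent builds on the output of the previous one.
    for agent in agents:
        claim = agent(claim)
    return claim


result = run_sequence({"amount": 1200}, [intake_agent, context_agent, preparation_agent])
print(result["gaps"])  # ['incident_date']
```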

Hand-off orchestration example

An agent routes work to the right specialist or human, based on what’s needed in the moment.
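A hand-off can be sketched in a few lines as well. The routing rules, the `claim_type` field, and the destination names below are assumptions made up for this example; real routing logic would come from the process analysis.

```python
from typing import Dict


def routing_agent(claim: Dict[str, object]) -> str:
    """Pick the next owner of the work based on what the claim needs right now."""
    if claim.get("gaps"):
        # Missing information needs a person to resolve it.
        return "human_reviewer"
    if claim.get("claim_type") == "auto":
        # Hypothetical specialist agent for auto claims.
        return "auto_claims_agent"
    return "general_claims_agent"


print(routing_agent({"claim_type": "auto", "gaps": ["incident_date"]}))  # human_reviewer
print(routing_agent({"claim_type": "auto", "gaps": []}))                 # auto_claims_agent
```

Checking for gaps first reflects the earlier principle: when judgment is needed, the agent hands off to a person instead of deciding.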

A reminder on starting small:

While we are discussing orchestration, a reminder that not every workflow needs multiple agents. Determining agent fit is about deliberate placement. Start small and expand only where it proves useful.

4. Designing the future state

Once the goals are clear, the current state is understood, and potential agent roles are surfacing, we design what the process should be. This is where the system’s behavior and the delineation of roles between human and system are made explicit.

Service blueprint

For this, we leverage a service design framework. One primary artifact at this stage is a future-state agentic service blueprint. It shows how people, agents, and systems work together not as isolated entities, but as a single flow of work.

The blueprint is not documentation, but rather a design tool. It forces alignment on how the system is supposed to operate and, most importantly, clarifies the roles, interactions, and hand-off points between the user and agents.

At a minimum, it makes four things explicit:

Frontstage human activity: the moments where people are involved: reviewing information, making decisions, responding to recommendations, or taking action

Backstage agent activity: what agents do outside the user’s direct view: gathering context, preparing inputs, coordinating steps

System behavior: what existing systems do in parallel: retrieving data, enforcing rules, updating records, and triggering downstream processes

Process: how work progresses through the process, including where it branches, transitions between human and agent, and requires human attention
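One way to picture those four elements is as a small data structure. The sketch below is only an illustration: the `BlueprintStep` fields, the `owner` rule, and the sample steps are hypothetical, and a real blueprint is a visual artifact, not code.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class BlueprintStep:
    name: str
    human: Optional[str] = None   # frontstage human activity
    agent: Optional[str] = None   # backstage agent activity
    system: Optional[str] = None  # system behavior
    next_steps: List[str] = field(default_factory=list)  # process flow, including branches


def owner(step: BlueprintStep) -> str:
    # Assumption: a step is "owned" by a human if one is involved, else by an agent, else by a system.
    if step.human:
        return "human"
    if step.agent:
        return "agent"
    return "system"


def handoffs(steps: List[BlueprintStep]) -> List[Tuple[str, str]]:
    """Return (from, to) pairs where work crosses between an agent and a human."""
    by_name = {s.name: s for s in steps}
    pairs = []
    for s in steps:
        for target in s.next_steps:
            if {owner(s), owner(by_name[target])} == {"human", "agent"}:
                pairs.append((s.name, target))
    return pairs


blueprint = [
    BlueprintStep("intake", agent="structure claim", system="store record", next_steps=["review"]),
    BlueprintStep("review", human="review summary and decide", next_steps=["update"]),
    BlueprintStep("update", system="update records"),
]
print(handoffs(blueprint))  # [('intake', 'review')]
```

Listing the hand-offs this way mirrors what the blueprint is meant to surface: the points where responsibility moves between an agent and a person.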

Designing at this level forces clarity about responsibility. It makes roles clear and transitions visible, and it prevents teams from jumping straight to screens without agreeing on how the system should actually behave.

Interface design

Interfaces still matter, but they are not the starting point. Screens and prototypes are used selectively to make specific moments in the process tangible. They exist to support the workflow defined in the blueprint.

The full process brought together

This diagram brings the four steps together into a single, end-to-end view of the work.

From framework to delivery with OutSystems

We are an OutSystems delivery partner, and this framework was designed based on our experience and approach in that context.

This type of design work does not succeed when delivery forces decisions to be locked in too early. Agent roles become too broad, and workflows become solidified before they’ve been validated.

This framework is structured to avoid that. It assumes an environment where workflows, integrations, and interfaces can be changed as the system is built. Using OutSystems, we can implement narrowly scoped agent roles, observe how they affect real work, and adjust without reworking the entire system.

This allows us to build and adjust the system without abandoning the original design intent.

AI Agent Experience Design as a service

We offer this work as an AI Agent Experience Design service.

The framework described in this article reflects how we work with organizations that want to design human–agent systems deliberately, before committing to development.

Thank you for reading 😊.
